1 Simulacra

Socrates: “I would have you imagine, then, that there exists in the mind of man a block of wax, which is of different sizes in different men; harder, moister, and having more or less of purity in one than another, and in some of an intermediate quality.”

Theaetetus: “I see.”

Socrates: “Let us say that this tablet is a gift of Memory, the mother of the Muses; and that when we wish to remember anything which we have seen, or heard, or thought in our own minds, we hold the wax to the perceptions and thoughts, and in that material receive the impression of them as from the seal of a ring; and that we remember and know what is imprinted as long as the image lasts; but when the image is effaced, or cannot be taken, then we forget and do not know.”[1]

It appears to be inherent in the networked reality of our access to novel experiences, as well as in the formation of self-images, that we work and think in metaphors and analogies. The impact of technological innovations on how we understand and act in worlds is evident throughout the history of thought: Plato has Socrates render the waxen tablet rhetorically as a gift of Memory, the goddess personifying the corresponding human faculty. Leibniz drew a connection between the mechanism of clockwork and the laws that form and operate the universe,[2] and Freud conceived his libido model in terms of the resistances and flows of electrical and hydraulic systems.[3]

Throughout the ever-recurring introduction of new electronic communication devices, storage media and tools for the automated processing of formerly cognitive labour, a vivid field of referential variability and imagery has been at play. The strategies for assimilating novelties to the familiar differ depending on the interest group coining the terms: the “data highway” adopts the notions of individual freedom and flexible corporate trade routes; in practice, ground-floor offices on Wall Street have become more valuable than executive floors because of their shorter distance to the access points for high-frequency trading, while rural areas are often left with mere ‘data beltways’.[4] The imagery of the Cloud, to which data is sent ‘up’ in a quasi-transcendent process of online storage, is rather deceptive. In fact, data centres are energy-intensive terrestrial conglomerates of rare earths, cooling plants and barbed wire.[5] Equally predefined by the ‘service providers’ are the terms describing our new connective bonds: ‘friend’ and ‘follower’ invoke social and organisational relationships, and the ‘subscriber’ is adopted from the preceding media epoch that followed the introduction of the letterpress.[6]

However, Socrates’, Leibniz’s and Freud’s ambiguous analogies[7] share a deeper, universal interest in the consistency of (human) existence. They argue with figurative arrangements in which neither the device nor the described subject is fully absorbed linguistically. Interestingly, perhaps the most striking contemporary image to join their ranks is founded on a terminological usurpation: formerly the occupational title of assistants who carried out calculations for mathematicians and astronomers, the term “computer” was an obvious choice for naming a universal calculating machine.[8] While the present-day significance of the all-purpose tool and ubiquitous interface obscures this semiotic shift, a consequential, recurring and short-circuited figure roams our technospheres: the computer as brain.

The current heyday of Artificial Intelligence is a hotbed of controversial associations, articulated, for example, in the anthropomorphic robot imagery with which journalistic essays picture machine intelligences whose actual shapes were never organized for communication (e.g. data centres), or in the technological arms race of corporations and states that are devoting large investments to research programmes associated with the term.[9] Considering the extent to which AI confronts and organizes our existence (communicative interaction, navigation, commerce, medicine, the reception of art, …), reductive comparisons like the brain–computer analogy may seem confining and narrow. Hence the critical mantra coined by AI symbolists in the 1980s, “We do not build planes like birds, so we do not build computers like brains”, inevitably resurfaces in each cycle of reactivation.[10]

Nevertheless, from the early days of computer engineering until today, the uneven pair has never lost its appeal as the promise of a self-image, grounded in the scientific “general agreement that the information-handling capabilities of biological networks do not depend upon any specifically vitalistic powers which could not be duplicated by man-made devices”,[11] as Frank Rosenblatt, inventor of the first neuromorphic machine, frames the initial position.

Of the many research endeavours in the field of AI, most seem engaged in developing tools for second-order observation or in the ‘traditional’ occupation of automating cognitive labour, i.e. in building airplanes rather than brains. Projects like the Heidelberg-based research initiative BrainScaleS, continued within the framework of the EU-funded Human Brain Project (HBP), however, fully embrace the approximation. This essay draws connections between the concepts of early computer pioneers and current research on neuromorphic computing within the HBP, and examines its strategies and assumptions in the light of concepts of (human) intelligence.

2 Black Box Reverse Engineering

“What is being suggested now is not that black boxes behave somewhat like real objects but that the real objects are in fact all black boxes, and that we have in fact been operating with black boxes all our lives.”[12]

2.1 Halt and Catch Fire

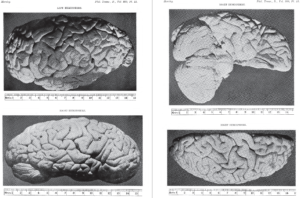

Six days after his death in October 1871, Charles Babbage, mathematician and developer of the Difference and Analytical Engines, precursors of today’s computers, was buried without his brain [Fig. 1]. It had been surgically removed to be studied for distinctive characteristics, but to this day nothing remarkable has been found.[13]

Fig. 1: Photographs of Charles Babbage’s brain from the original report by Victor Horsley

While Babbage’s lifetime achievements, his social rank and attributes, and his network of correspondence and publications were evident enough to prompt the examination, the phenomenological analysis of the organ most closely associated with intelligence revealed no rationale for genius or for any of the above-mentioned social parameters. Reliable assumptions about structure and its correlation with patterns of (inter)action seem to be the core prerequisite for a scientific “duplication” of an organ “by man-made devices”. Alan Turing, who designed one of the first general-purpose stored-program computers, the Automatic Computing Engine (ACE), considered himself to be in the process of building a brain[14] and spells out the “positive” prospect while hinting at the dilemma:

“A great positive reason for believing in the possibility of making thinking machinery is the fact that it is possible to make machinery to imitate any small part of a man. That the microphone does this for the ear, and the television camera for the eye, are commonplaces. One can also produce remote controlled Robots whose limbs balance the body with the aid of servo-mechanisms.”[15]

For most organs, physical and biochemical descriptions provide a sufficient basis for reconstructing their functionality. Since our interest in the brain reaches beyond metabolic or coordinative tasks, the first concession is to accept the auxiliary formula of the black box as a precaution:

“Even when we say we’re aware, we’re never really aware. There is not enough room in our brain to represent itself. If you kept asking ‘Why did I do what I did?’ Then you’d ask why did I ask why I did what I did – and how will I ever get out of this loop. And you’d never get anything done. So evolution must have helped to hide these processes from ourselves.”[16]

This quote by the late Marvin Minsky not only circumscribes the humble, post-constructivist tenor of the scientific perspective on intelligence; it can also be read as a renewed interest in concepts of intelligence that not only allow for vagueness but necessarily include it. Minsky, co-author of the 1955 Dartmouth proposal in which the term “artificial intelligence” was coined and co-founder of the MIT Artificial Intelligence Laboratory (today part of CSAIL), was one of the most influential figures in the field. After developing a maze-solving apparatus, SNARC, in 1951, which by its structure can be labelled the first artificial neural network, he concluded that research on “neural-analog reinforcement systems”[17] was not a promising path towards groundbreaking results, deeming it insufficient.[18] Accordingly, the paper Frank Rosenblatt published six years later,[19] describing the promising and successful work on his neuromorphic machine, the Perceptron, which took into account biological studies of the operations of the frog’s eye,[20] was eventually overshadowed by Minsky’s critique, elaborated together with his MIT AI Lab co-director Seymour Papert in the book “Perceptrons”.[21] Rosenblatt’s approach, which anticipated many of today’s techniques in artificial neural network computing, was frozen by the scepticism voiced by the MIT researchers and by the frustrated expectations of politicians and corporate AI investors, which led to a general collapse of funding. The ensuing shortage of research infrastructure, and thus of scientific advancement, between the late 1960s and the 1990s was later described as the “AI Winter”.[22]

The predominant alternative route during this period relied on the belief in an internalized logic as the basis of intelligent systems: the most efficient way to process external information was thought to be symbolic representation. John Haugeland coined the term GOFAI (good old-fashioned artificial intelligence) as early as 1985,[23] providing a critical framework for the efforts of AI symbolists and placing them in line with Leibniz, Babbage and Turing, who all based their computing machines on mathematical rule sets. Turing identified the obstacle to developing a sufficient ‘machine intelligence’[24] as a memory problem:

“It is certainly true that ‘acting like a machine’ has become synonymous with lack of adaptability. But the reason for this is obvious. Machines in the past have had very little storage, and there has been no question of the machine having any discretion.”[25]

Intelligent behaviour in the sense of GOFAI would be based entirely on an archive of representations and their symbolic relations. Since the logically operating machine can only be disturbed by mechanical interference independent of the logical process itself (short circuit, material failure or damage), it cannot otherwise fail in fulfilling its intelligible task. In other words, substituting the machine for the human computer ideally reduced the possible variability of the computational result to a single parameter: system integrity. Yet Turing already envisaged a role for failure in a scenario for a chess-playing machine:

“‘Can the machine play chess?’ It could fairly easily be made to play a rather bad game. It would be bad because chess requires intelligence. […] There are indications however that it is possible to make the machine display intelligence at the risk of its making occasional serious mistakes. By following up this aspect the machine could probably be made to play very good chess.”[26]

…and in a later commentary on the adaptive plasticity of his ACE…

“It will also be necessarily devoid of anything that could be called originality. There is, however, no reason why the machine should always be used in such a manner: there is nothing in its construction which obliges us to do so. It would be quite possible for the machine to try out variations of behaviour and accept or reject them in the manner you describe and I have been hoping to make the machine do this. This is possible because, without altering the design of the machine itself, it can, in theory at any rate, be used as a model of any other machine, by making it remember a suitable set of instructions.”[27]

… to finally conclude with an invitation to the psychiatrist and cybernetics pioneer William Ross Ashby to conduct experiments on his system, based on neurological assumptions about the brain’s plasticity in relation to memory:

“[…] [A]lthough the brain may in fact operate by changing its neuron circuits by the growth of axons and dendrites, we could nevertheless make a model, within the ACE, in which this possibility was allowed for, but in which the actual construction of the ACE did not alter, but only the remembered data […].”[28]

In fact, most applications currently using AI, or more precisely multi-layered neural pattern recognition, do not run on descendants of Rosenblatt’s Perceptron hardware. Rather, the structural principle of the Perceptron is realized within frameworks originating in Babbage’s Analytical Engine and Turing’s ACE, namely the von Neumann architecture,[29] the basic structure of virtually every personal computer and smartphone and of most data centres today. While the universality of this architecture, which separates memory and processing units, is evident from its ubiquity, its multi-purpose structure comes, as Turing anticipated, at “the expense of operating slightly slower than a machine specially designed for [a single] purpose.”[30] Consequently, today’s computer is better compared to the cargo plane, passenger plane, water bomber, reconnaissance plane and/or warplane, whereas “[a] perceptron is first and foremost a brain model, not an invention for pattern recognition”, as Rosenblatt puts it: its central “utility is in enabling us to determine the physical conditions for the emergence of various psychological properties”.[31] The fundamental difference between the two models seems to lie in their motivation: process(ing) optimisation versus speculative (self-)observation.
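To make the “structural principle of the Perceptron” a little more concrete, here is a minimal sketch in Python of error-correction learning for a single threshold unit in the style of Rosenblatt. Tellingly, it runs as ordinary software on a von Neumann machine. The toy tasks (logical AND and XOR), the learning rate and the epoch limit are illustrative assumptions, not taken from Rosenblatt’s papers.

```python
# Minimal single-layer perceptron with error-correction learning.
# Assumptions (illustrative only): binary inputs, AND/XOR toy tasks,
# learning rate 1.0 and at most 20 passes over the data.

def predict(weights, bias, x):
    """Weighted sum plus hard threshold: the unit fires (1) or stays silent (0)."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0

def train(samples, epochs=20, lr=1.0):
    """Adjust weights only when the unit misclassifies a sample."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every sample classified correctly: stop early
            break
    return weights, bias

AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    for name, data in (("AND", AND_SAMPLES), ("XOR", XOR_SAMPLES)):
        w, b = train(data)
        print(name, [predict(w, b, x) for x, _ in data])
```

On AND the unit converges to the correct outputs [0, 0, 0, 1]. On XOR it cannot succeed, because no single straight line separates the two classes. This limitation of single-layer perceptrons is one of the points Minsky and Papert elaborated in “Perceptrons”; multi-layered networks overcome it.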

2.2 Current Brain Project

2.2.1 Parallel Continuity

In the current renaissance of AI, even the seemingly narrow utility of brain models receives massive recognition and material support. One example is the Human Brain Project (HBP), one of the first two “Future and Emerging Technologies Flagships”, supported with 1 billion euros by the European Union.[32] The project’s aim is precisely the consolidation of research from the fields of neuroscience and computer science, physics, engineering and robotics. Its 116 partners (universities, research institutes, companies) are organized in twelve subprojects, ranging from “Theoretical Neuroscience” to “Medical Information” to “Ethics and Society”, and as many as four platforms dedicated to computing and/or brain simulation.[33]

The central Neuromorphic Computing Platform comprises both a digital and an analogue approach to developing a brain model: the digital SpiNNaker system at the University of Manchester specializes in parallel real-time computing with a focus on low power consumption, while the BrainScaleS system at Heidelberg University employs analogue electronics with the aim of building a “direct, silicon-based image of the neuronal networks found in nature”.

Both approaches take into consideration observations made by one of the founding initiators of the HBP, the biologist and neuroscientist Henry Markram. In 1994, during a postdoctoral fellowship at the Max Planck Institute for Medical Research in Heidelberg, he used the “patch” technique on biological neurons to measure the nature of chemical signal processing and synaptic changes.[34] His recordings of the electrical signals made it possible to adapt the data for (brain) model simulations.

The SpiNNaker project simulates neural signal processing following the principle “[machine] as a model of any other machine”[35] by digitally calculating current flows and plasticity on microprocessors. Crucial parameters are represented as values in millivolts or amperes and ultimately in binary code. The physical simulation within the BrainScaleS system, by contrast, is a direct attempt to reproduce the biological processes with actual currents running through silicon-based neuromorphic chips.[36] Karlheinz Meier, the physicist and central figure in the development of the BrainScaleS model, condenses the approach as follows: “The model parameters are represented by physical quantities. […] you represent a voltage by a voltage, and a current by a current and a charge by a charge. The basic difference is, that you’re not moving ions through cell membranes, but you move electrons through transistors. Otherwise it’s the same physics – hopefully.”[37]

2.2.2 Graceful Integration

The use of electrical impulses not only circumvents an expensive form of representation; it also fundamentally changes information processing:

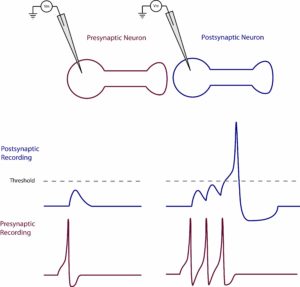

Fig. 2: Temporal summation of excitatory postsynaptic potentials[38]

Instead of clocked binary information (yes/no, on/off, 1/0), a single pulse manifests itself as an amplitude with a temporal envelope. A sequence of pulses can be added up (summation) until the membrane potential reaches the threshold at which the neuron fires. Since the shape of these standard pulses does not vary, the crucial parameters are their temporal spacing and their number, which provides a new basis for coding in the temporal dimension. At the same time, the outcome of the process once again has a binary quality: either the neuron reached its threshold and fired (“spiked”), or it did not [Fig. 2]. Operating as a mixed-signal system (analogue and binary) is considered to be the fundamental basis of the brain’s large network size and energy efficiency.
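How the timing and number of identical pulses decide whether the threshold is reached can be sketched with a leaky integrate-and-fire neuron, a standard textbook simplification. The sketch computes the membrane voltage step by step as numbers, which is in spirit the kind of digital calculation a platform such as SpiNNaker performs, whereas BrainScaleS lets physical currents do the summing. All parameter values (resting potential, threshold, time constant, pulse size) are illustrative assumptions, not BrainScaleS settings or biological measurements.

```python
import math

# Illustrative parameters (assumptions, not measured or BrainScaleS values).
V_REST = -65.0    # resting membrane potential, mV
V_THRESH = -55.0  # firing threshold, mV
TAU_M = 10.0      # membrane time constant, ms
EPSP_MV = 4.0     # depolarisation caused by one incoming pulse, mV
DT = 1.0          # time step, ms

def lif_output_spikes(input_times_ms, t_end_ms=60):
    """Leaky integrate-and-fire neuron fed with identical input pulses.

    input_times_ms: integer arrival times (ms) of incoming pulses.
    Returns the list of times (ms) at which the neuron fired.
    """
    decay = math.exp(-DT / TAU_M)  # fraction of depolarisation kept per step
    v = V_REST
    fired = []
    for t in range(t_end_ms):
        # Leak: the membrane relaxes exponentially towards rest.
        v = V_REST + (v - V_REST) * decay
        # Summation: every pulse arriving now adds the same fixed amount.
        v += EPSP_MV * input_times_ms.count(t)
        # Threshold: the analogue sum is turned into a binary event.
        if v >= V_THRESH:
            fired.append(t)
            v = V_REST  # reset after the spike
    return fired

if __name__ == "__main__":
    print(lif_output_spikes([10, 11, 12]))  # pulses close together
    print(lif_output_spikes([10, 30, 50]))  # same pulses, spread out
```

With these settings, three pulses arriving 1 ms apart push the membrane across threshold at 12 ms, while the same three pulses spaced 20 ms apart decay back towards rest in between and never trigger a spike: same quantity, different timing, different binary outcome.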

In traditional analogue computing (as well as in Rosenblatt’s Perceptron), signal decay has been a fundamental problem for larger systems capable of more complex computations. In digital electronics, by contrast, the very limited variability of signals (either 1 or 0) means that each stage can restore its output to exactly the level of its input, which makes digital systems in principle fully scalable.[39] The only limit now being reached by ever larger supercomputers is the mere fact that, in the end, all digital computation rests on analogue electronics and inert, resistive matter. In larger systems, processing speed decreases to a point where investing in more processing units no longer pays off.[40] Errors likewise become more likely and can have fatal consequences in Turing-based systems.
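The contrast between accumulating analogue decay and digital regeneration can be illustrated with a toy relay chain. The Gaussian noise model, its magnitude and the 0.5 decision threshold are assumptions chosen for illustration; real circuits degrade in more complicated ways.

```python
import random

def relay_chain(value, stages, noise, regenerate, rng):
    """Pass a signal through `stages` noisy relay stages.

    Each stage adds Gaussian noise. With `regenerate=True` the stage then snaps
    the value back to 0 or 1, as a digital gate does; otherwise noise accumulates.
    """
    for _ in range(stages):
        value += rng.gauss(0.0, noise)
        if regenerate:
            value = 1.0 if value >= 0.5 else 0.0
    return value

def mean_error(stages, regenerate, trials=2000, noise=0.05, seed=1):
    """Average deviation from the original value 1.0 after the chain."""
    rng = random.Random(seed)
    total = sum(
        abs(relay_chain(1.0, stages, noise, regenerate, rng) - 1.0)
        for _ in range(trials)
    )
    return total / trials

if __name__ == "__main__":
    for n in (1, 10, 100):
        print(n, "stages  analogue:", round(mean_error(n, False), 3),
              " digital:", mean_error(n, True))
```

With these settings the analogue chain drifts further from the original value the more stages it passes (roughly with the square root of the number of stages), while the regenerating chain returns exactly 1.0, because noise of this size practically never crosses the decision threshold.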

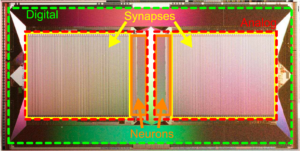

The biological brain, on the other hand, is fault-tolerant: estimates of the average death rate of neurons in the human brain range between one and two and a half per second,[41] yet it remains operative. The term coined for this ability, graceful degradation, is used in both neuroscience and computer science[42] to circumscribe “the ability of a computer, machine, electronic system or network to maintain limited functionality even when a large portion of it has been destroyed or rendered inoperative.”[43] If a single neuron is lost, signals can be distributed via new routes. Together with local analogue processing and the propagation of information in the form of spikes (again binary signals), these fundamental structural elements are assumed to underlie the expanse of the human brain network and, consequently, the scalability of its silicon equivalent. A specially developed application of the wafer-scale integration principle, i.e. the production of wafers carrying many (~400) pre-connected chips [Fig. 3], allows the production of fully operative processing units of up to 200,000 artificial neurons connected by 50,000,000 synapses [Fig. 4].

Fig. 3: Photograph of the HICANN (High Input Count Analog Neural Network) chip, comprising 512 neurons and 100,000 synapses ‘framed’ by a digital communication infrastructure that enables connectivity with neighbouring chips.[44]

Fig. 4: The BrainScaleS wafer-scale hardware system.[45]

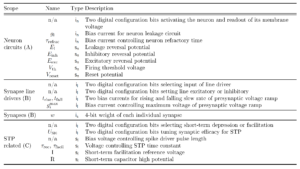
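The intuition that signals can be “distributed via new routes” when units fail can be illustrated with a toy graph experiment: a layered network in which every unit connects to every unit of the next layer, compared with a single chain of units. The topology, the 30 % failure rate and the number of trials are assumptions for illustration only; the sketch models neither the brain nor the BrainScaleS routing fabric.

```python
import random
from collections import deque

def layered_network(layers, width):
    """Toy topology: each unit feeds every unit of the next layer (redundant routes)."""
    return {
        (i, a): [(i + 1, b) for b in range(width)]
        for i in range(layers - 1)
        for a in range(width)
    }

def signal_gets_through(edges, layers, width, dead):
    """Breadth-first search from surviving input units to any surviving output unit."""
    start = [(0, a) for a in range(width) if (0, a) not in dead]
    seen = set(start)
    queue = deque(start)
    while queue:
        node = queue.popleft()
        if node[0] == layers - 1:
            return True
        for nxt in edges.get(node, []):
            if nxt not in dead and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False

def survival_rate(width, layers=6, kill_fraction=0.3, trials=500, seed=0):
    """Fraction of trials in which a signal still crosses the network after random unit loss."""
    rng = random.Random(seed)
    edges = layered_network(layers, width)
    units = [(i, a) for i in range(layers) for a in range(width)]
    survived = 0
    for _ in range(trials):
        dead = set(rng.sample(units, int(kill_fraction * len(units))))
        if signal_gets_through(edges, layers, width, dead):
            survived += 1
    return survived / trials

if __name__ == "__main__":
    print("single chain    :", survival_rate(width=1))
    print("redundant layers:", survival_rate(width=10))
```

A single chain fails as soon as any one of its units dies, whereas the redundant network almost always keeps a path open after losing 30 % of its units, which is the structural core of graceful degradation.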

As an analogue system, the neuromorphic model requires no software to run. Via microprocessors, however, data about the network’s behaviour can be extracted, and a set of parameters defining the network structure, its plasticity and behaviour can be controlled. The parameter list [Fig. 5] shows a selection of crucial variables, including not only informatics terminology such as “configuration bits” or “readout” but also many terms from neurobiology, such as “firing threshold”, “reversal potential” and “synapse weight”.

Fig. 5: List of analogue current and voltage parameters as well as digital configuration bits of the BrainScaleS model.[46]

A total of 44 MB of parameter data can be applied to a single wafer, and the current prototype consists of twenty such interconnected modules, totalling up to four million neurons and one billion synapses. A larger version extending over four floors is currently under construction.
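The prototype totals can be checked against the per-wafer figures given earlier in the text (up to 200,000 neurons and 50,000,000 synapses per wafer). The bits-per-synapse line is only a rough upper bound, assuming that “44 MB” means decimal megabytes and that the entire parameter budget went to synapses, which it does not; it merely indicates an order of magnitude, comparable to the 4-bit synaptic weight resolution examined by Pfeil et al. (listed in the bibliography).

```python
# Figures quoted in the text; per-wafer values are upper bounds.
NEURONS_PER_WAFER = 200_000
SYNAPSES_PER_WAFER = 50_000_000
PARAM_BYTES_PER_WAFER = 44 * 10**6  # "44 MB", assumed to be decimal megabytes
WAFERS = 20

total_neurons = WAFERS * NEURONS_PER_WAFER
total_synapses = WAFERS * SYNAPSES_PER_WAFER
synapses_per_neuron = SYNAPSES_PER_WAFER // NEURONS_PER_WAFER
# Upper bound on bits available per synapse if all parameter memory went to synapses.
bits_per_synapse_budget = PARAM_BYTES_PER_WAFER * 8 / SYNAPSES_PER_WAFER

if __name__ == "__main__":
    print(f"{total_neurons:,} neurons, {total_synapses:,} synapses")
    print(f"{synapses_per_neuron} synapses per neuron on average")
    print(f"at most {bits_per_synapse_budget:.2f} bits of parameter data per synapse")
```

The totals match the four million neurons and one billion synapses stated for the twenty-wafer prototype, with an average of 250 synapses per neuron and a budget of about 7 bits per synapse.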

2.2.3 Hummingbird Brain

While it is almost certain that closing the gap between the size of the biological example (~86 billion neurons) and the brain model would require an even bigger institute and may not happen in the near future,[47] two operative parameters are quite remarkable for both of the HBP’s objectives (to draw conclusions about the brain through simulations of larger-scale neural networks and to develop new machines for data processing)[48]: energy efficiency and speed. Owing to its physics-based processing model, the BrainScaleS system has a remarkably low energy demand compared with other approaches to artificial neural computing, even if it is still ~10,000 times less efficient than the biological brain [Fig. 6].

Fig. 6: Karlheinz Meier: EnergieSkalen (Energy Scales). A comparison of the energy efficiency of different computational approaches as they approach that of the brain, from IBM’s BlueBrain to the SpiNNaker system to the BrainScaleS model.[49]

At the same time, the BrainScaleS model runs 10,000 times faster than its biological equivalent, allowing a day to be simulated in less than ten seconds and a year in less than an hour. The magnitude of this acceleration becomes vivid when set against approximate time scales of crucial cognitive processes in nature, such as the recognition of causality or learning, and against simulations on conventional computer architectures [Fig. 7]. Given this acceleration rate, Karlheinz Meier even considered artificial evolutionary studies.[50]

Fig. 7: Karlheinz Meier: ZeitSkalen (Time Scales). Biological time scales of causality recognition, synaptic plasticity, learning, development and evolution compared with simulations on the computer and on the physical model.[51]
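The acceleration figures quoted for BrainScaleS follow from dividing biological time by the stated speed-up factor of 10,000. This sketch assumes a 365-day year; the century line is added only to illustrate why evolutionary time scales come within reach.

```python
ACCELERATION = 10_000  # speed-up factor stated for BrainScaleS

MINUTE = 60
HOUR = 60 * MINUTE
DAY = 24 * HOUR   # 86,400 s of biological time
YEAR = 365 * DAY  # assumed non-leap year

def hardware_seconds(biological_seconds, factor=ACCELERATION):
    """Wall-clock seconds the accelerated model needs for a stretch of biological time."""
    return biological_seconds / factor

day_in_seconds = hardware_seconds(DAY)
year_in_minutes = hardware_seconds(YEAR) / MINUTE
century_in_days = hardware_seconds(100 * YEAR) / DAY

if __name__ == "__main__":
    print(f"one day   -> {day_in_seconds:.2f} s")
    print(f"one year  -> {year_in_minutes:.1f} min")
    print(f"a century -> {century_in_days:.2f} days")
```

A day thus takes under nine seconds and a year roughly 53 minutes of hardware time; a century of biological time would take a little over three and a half days.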

This vast speed, imposed by the physics of the system’s silicon material base, has consequences for its field of application. Conclusions for the control of robots, for example, can be drawn via the interface of simulated digital environments.[52] It is almost impossible to link the machine directly to devices quick enough to act in the physical world, although research projects in Heidelberg by Korbinian Schreiber attempt to achieve ‘accelerated embodiment’ using high-speed motors taken from hard disk drives.[53] The twofold aim of the Human Brain Project, learning about the brain and its processes and developing new means of computation, has both forced the clarification of neurobiological speculations and led to necessary experimentation with architectures for computational devices, yielding significant insights into energy efficiency, scalability and speed. Karlheinz Meier’s legacy will be continued within the framework of the HBP until 2023.[54]

3 #알파 고가 (#AlphaGo) #npng

What seems to be a side aspect of AI thinking and brain-inspired computing was briefly touched upon in the timing challenge of linking the BrainScaleS system to robotics. Yet the relationship between the brain and the body, and the significance of interaction in the physical realm, goes back to a premise of artificial intelligence research (at least in the Western hemisphere) that Turing, once again, introduced as early as 1951:

“The object if produced by present techniques would be of immense size, even if the ‘brain’ part were stationary and controlled the body from a distance. In order that the machine should have a chance of finding things out for itself it should be allowed to roam the countryside, and the danger to the ordinary citizen would be serious.”[55]

In fact, the renaissance of neural computing is motivated by precisely two factors, both of which leave out the corporeality of biological intelligence: fast electronic processing systems and the vast availability of representative, labelled training data. Exemplary training sets range from the benign (the EMNIST dataset of handwritten characters)[56] to the politically problematic (MegaFace,[57] the HRT Transgender Dataset[58])[59] or are built for specific tasks such as identifying cats, individualised marketing or data mining. Almost all of these models, even if automated, rely on supervised learning (i.e. on inputs that have been classified beforehand) and on reinforcement learning. But it is not only the autonomy of a system “roaming” an environment that renders the “method” for “producing a thinking machine […] altogether too slow and impracticable.” Turing concludes: “Moreover even when the facilities mentioned above were provided, the creature would still have no contact with food, sex, sport and many other things of interest to the human being.”[60]

As mentioned at the beginning, the empirical record Babbage generated during his lifetime seems to be of more value for drawing conclusions about his intellect and thinking than the composition of his brain. A different, more fundamental challenge to locating intelligence biologically in the brain would even suggest that the significant part had been buried: in their book “How the Body Shapes the Way We Think”, Rolf Pfeifer and Josh Bongard question the disembodiment of reasoning in (Western)[61] computer science and neurology and advocate the idea of embodied intelligence. Drawing on systems-theoretical approaches, they analyse the organization of agents and groups, the role of gravity in locomotion and appearance, ecological niches and terrain, and in doing so quite naturally avoid an anthropocentric approach to the very concept of intelligence.[62] From this perspective, the question of how a neuromorphic brain model would be informed, i.e. how its plasticity would develop over a lifespan and how it would interact with other organs and with/in a radically open environment, seems crucial.

This could be the starting point for ever more complex assumptions about the significance of intelligent interspecies interaction, or even about the role of metabolic processes and symbiogenesis.[63] If we take into account the social preconditions of learning and of group or individual development, i.e. vertical stress,[64] training and the role of reward and punishment, expenditure and suffering, we can perhaps capture the complexity of the implications by briefly juxtaposing a groundbreaking expert computer system with its human (and presumably, in part, traumatised) counterpart: in March 2016, Lee Sedol, world champion of Go, arguably the most complex strategy board game, was defeated by AlphaGo, a computer program developed by DeepMind, the AI research company of the Alphabet group. Lee Sedol won only the fourth of the five games, which earned him 170,000 dollars in recognition of his participation and his single victory. The prize money of 1 million dollars was distributed by DeepMind among charities and Go organisations.[65] AlphaGo was subsequently awarded the honorary professional rank of 9-dan, the highest rank in Go, by the Korea Baduk Association, South Korea’s Go association.[66]

Lee Sedol’s international Twitter account, presumably set up for the March 2016 tournament, can be read as a brief document of learning strategy, social pressure, and the externalisation and reintegration of reactions, ending in retreat: <https://twitter.com/lee_sedol?lang=de>. Starting with a terse “Damn.” after the first loss, Lee Sedol retweets messages by one of his ‘human counterparts’, DeepMind co-founder Mustafa Suleyman, and by a basic income advocate drawing conclusions from the outcome of the first game for job security. Throughout the tournament, Lee Sedol comments briefly on his efforts to learn from and defeat AlphaGo, and integrates the “Condolences” of Garry Kasparov, the chess champion defeated by IBM’s supercomputer Deep Blue in 1997, as well as the “Huge congratulations” of fellow DeepMind co-founder Demis Hassabis on his victory on 13 March and on “trending worldwide” on Twitter (three top-ten positions, all in Korean, one praising AlphaGo). The channel’s final message, posted on the same day, reads: “We cannot and should not give up on the human mind’s ability to be creatively intelligent.”

The emotional ride and the implications of the match, on both the individual and the universal scale, seem to be parameters crucial to the continuities and discontinuities of our conduct, memory and identity; yet it is questionable whether their integration will ever be of interest for an externalized observation of (human) intelligence. At the same time, amid the current media transition, mediated communications or second-order intelligences are being, and will continue to be, integrated into what we may want to fix or update at particular moments as the “human entity”. With Jean-François Lyotard, acceptance as a thinking counterpart remains reserved (for now) for the black box of the human as a “suffering machine”:

“The unthought hurts because we’re comfortable in what’s already thought. And thinking, which is accepting this discomfort, is also, to put it bluntly, an attempt to have done with it. That’s the hope sustaining all writing (painting, etc.): that at the end, things will be better. As there is no end, this hope is illusory. So: the unthought would have to make your machines uncomfortable, the uninscribed that remains to be inscribed would have to make their memory suffer. Do you see what I mean? Otherwise why would they ever start thinking? We need machines that suffer from the burden of their memory.”[67]

Bibliography

§ Baecker, Dirk: 4.0 oder Die Lücke die der Rechner lässt. Berlin: Merve, 2018

§ Clarke, Samuel: A Collection of Papers, Which passed between the late Learned Mr. Leibnitz, and Dr. Clarke, In the Years 1715 and 1716. London: The Crown at St. Paul’s Church Yard, 1717

§ Copeland, Jack: Computable Numbers: A Guide. In: id. (ed.): The Essential Turing. Oxford: Oxford University Press, 2004

§ Diesmann, Markus: Brain-scale Neuronal Network Simulations on K. pp. 83–90. In: International Symposium for Next-Generation Integrated Simulation of Living Matter: Proceedings of the 4th Biosupercomputing Symposium. Tokyo: Ministry of Education, Culture, Sports, Science and Technology (MEXT), 2012

§ Domingos, Pedro: The Master Algorithm. How the Quest for the Ultimate Learning Machine Will Remake Our World. New York: Basic Books, 2015

§ Dotzler, Bernhard [ed.]: Computerkultur Band VI, Babbages Rechenautomate. Ausgewählte Schriften. Vienna [i.a.]: Springer, 1996

§ Flood, Dorothy G.; Paul D. Coleman: Neuron Numbers and Sizes in Aging Brain: Comparisons of Human, Monkey, and Rodent Data. In: Neurobiology of Aging, Vol. 9, pp.453-463

§ Freud, Sigmund: Das Ich und das Es. Frankfurt a. M.: S. Fischer, 1975 [1923], online on Projekt Gutenberg–DE <http://gutenberg.spiegel.de/buch/das-ich-und-das-es-932/2>, last: 30.12.2018

§ Haraway, Donna: The Companion Species Manifesto. Dogs, People, and Significant Otherness. Chicago: Prickly Paradigm Press, 2003

§ Haugeland, John: Artificial Intelligence. The Very Idea. Cambridge: MIT Press, 1985

§ Lorenz, Konrad: The Comparative Method in Studying Innate Behavior Patterns. Symposia of the Society for Experimental Biology 4, Cambridge: 1950, digitized by Konrad Lorenz Haus Altenberg <http://klha.at/papers/1950-InnateBehavior.pdf>, last: 30.12.2018

§ Lyotard, Jean-François: The Inhuman. Reflections on Time. [L’inhumain. Causeries sur le temps.] Transl. by G. Bennington, R. Bowlby. Stanford: Stanford University Press, 1991 [1988]

§ Margulis, Lynn: The Symbiotic Planet. A New Look at Evolution. London: Phoenix, 1999

§ Markram, Henry [et al.]: Dendritic Calcium Transients Evoked by Single Back-propagating Action Potentials in Rat Neocortical Pyramidal Neurons. In: Journal of Physiology, Vol. 485, London: 1995, pp.1-20

§ McCulloch, Warren; Walter Pitts: “How We Know Universals: The Perception of Auditory and Visual Forms.” In: Bulletin of Mathematical Biophysics 9/3, 1947, pp.127-147

§ Minsky, Marvin: Theory of Neural-Analog Reinforcement Systems and Its Application to the Brain Model Problem. Princeton: Princeton University, 1954

§ Minsky, Marvin; Seymour Papert: Perceptrons. Cambridge: MIT Press, 1969

§ Nilsson, Nils J.: The Quest for Artificial Intelligence. A History of Ideas and Achievements. Stanford: University Press, 2009

§ Pfeil, Thomas [et al.]: Is a 4-bit Synaptic Weight Resolution Enough? Constraints on Enabling Spike-Timing Dependent Plasticity in Neuromorphic Hardware. In: Frontiers in Neuroscience, Vol.6, 2012

§ Plato: “Theaetetus”. In Jowett, Benjamin [ed., transl.]: The Dialogues of Plato. Translated into English. With Analyses and Introductions. Vol 4 [5 vols.], 3rd rev. corr. ed. Oxford: Oxford University Press, 1892. Online version on Online Library of Liberty. <http://oll.libertyfund.org/titles/plato-dialogues-vol-4-parmenides-theaetetus-sophist-statesman-philebus#Plato_0131-04_2182>, last: 31.12.2018.

§ Posner, Michael [ed.]: Foundations of Cognitive Science. Cambridge: MIT Press, 1989

§ Randell, Brian: On Alan Turing and the Origins of Digital Computers. Newcastle upon Tyne: Computing Laboratory University of Newcastle upon Tyne, 1972

§ Rosenblatt, Frank: The Perceptron. A Perceiving and Recognizing Automaton. Buffalo: Cornell Aeronautical Laboratory, 1957

§ —“—: Principles of Neurodynamics. Perceptrons and the Theory of Brain Mechanisms. Ithaca: Cornell University, 1961

§ Sloterdijk, Peter: Du mußt dein Leben ändern. Frankfurt a. M.: Suhrkamp, 2009

§ Turing, Alan: “Intelligent Machinery”. In Copeland, 2004 [1948], pp.410-432

§ —“—: “Lecture on the Automatic Computing Engine”. In: Copeland, 2004 [1947], pp.378-394

§ —“—: “Letter to W. Ross Ashby”, [undated]. On The Turing Archive for the History of Computing <http://www.alanturing.net/turing_ashby/>, last: 21.12.2018

Online References

§ Dutton, Tim: Overview of National AI Strategies. On Medium, 2018 <https://medium.com/politics-ai/an-overview-of-national-ai-strategies-2a70ec6edfd>, last: 20.12.2018

§ European Commission: “Human Brain Project Flagship.” <https://ec.europa.eu/digital-single-market/en/human-brain-project>, last: 22.12.2018

§ Grübel, Andreas: F09/F10 Neuromorphic Computing. Ver. 0.6. Heidelberg: Kirchhoff Institut für Physik, 2017 <https://www.physi.uni-heidelberg.de/Einrichtungen/FP/anleitungen/old/F09-10.pdf>, last: 30.12.2018

§ Hart, Sam: Critical Engineering. An Interview with Julian Oliver. On Avant.org, 2014 <http://avant.org/artifact/julian-oliver/>, last: 20.12.2018

§ Hassabis, Demis: What we learned in Seoul with AlphaGo. On Google Blog, 2016 <https://blog.google/technology/ai/what-we-learned-in-seoul-with-alphago/>, last: 30.12.2018

§ HBP Neuromorphic Computing Platform Guidebook: About the BrainScaleS Hardware. The Neuromorphic Wafer Module. <https://electronicvisions.github.io/hbp-sp9-guidebook/pm/pm_hardware_configuration.html>, last: 30.12.2018

§ Horsley, Sir Victor: “Description of the Brain of Mr. Charles Babbage, F.R.S.”, 1907. On archive.org <https://ia600504.us.archive.org/23/items/philtrans01141826/01141826.pdf>, last: 11.11.2018

§ Kahng, Jee Heun; Se Young Lee: Google artificial intelligence program beats S. Korean Go pro with 4-1 score. On Reuters, 2016 <https://www.reuters.com/article/us-cyber-latimes/cyber-attack-hits-u-s-newspaper-distribution-idUSKCN1OT01O>, last: 30.12.2018

§ Lee Sedol’s international Twitter account <https://twitter.com/lee_sedol?lang=de>, last: 31.12.2018

§ Maslanka, Chris: The Emotion Machine. London: BBC Radio 3, 2004 <http://web.media.mit.edu/~minsky/BBC3.mp3>, last: 12.12.2018

§ Meier, Karlheinz: Neuromorphic Computing. Architectures and Applications. Lecture at Kirchhoff Institut für Physik Heidelberg within the framework of the seminar The Aesthetics of Neural Networks at HfG KIM by Prof. Matteo Pasquinelli in February 2018 <https://vimeo.com/259920222>, prov. by the author

§ Meier, Karlheinz: Simulieren ohne Computer. Physikalische Modelle des Gehirns. Lecture, 2012 <http://flagship.kip.uni-heidelberg.de/video/20130705_SimulierenOhneComputer/#>

§ Office of the New York City Comptroller Scott M. Stringer: Internet Inequality. Broadband Access in NYC. 2015 <https://comptroller.nyc.gov/wp-content/uploads/documents/Internet_Inequality_UPDATE_September_2015.pdf>, last: 20.12.2018

§ Pasquinelli, Matteo: Machines that Morph Logic. Neural Networks and the Distorted Automation of Intelligence as Statistical Inference. In Glass Bead: “Site 1: Logic Gate. The Politics of the Artifactual Mind.”, 2017 <http://www.glass-bead.org/article/machines-that-morph-logic/?lang=enview>, last: 21.12.2018

§ Perez, Carlos E.: The Many Tribes of Artificial Intelligence. On Medium, 2017 <https://medium.com/intuitionmachine/the-many-tribes-problem-of-artificial-intelligence-ai-1300faba5b60>, last: 04.11.2018

§ Pfeifer, Rolf; Josh C. Bongard: How the Body Shapes the Way We Think. Cambridge: MIT Press, 2006

§ Schreiber, Korbinian: The Play Pen. Accelerated Embodiment for Accelerated Hardware. Lecture at the Human Brain Project Education Programme: 1st HBP Student Conference. 2017

§ University of Washington: MegaFace and MF2: Million-Scale Face Recognition. <http://megaface.cs.washington.edu/>, last: 30.12.2018

§ Vincent, James: Transgender YouTubers had their videos grabbed to train facial recognition software. The Verge, 2017 <https://www.theverge.com/2017/8/22/16180080/transgender-youtubers-ai-facial-recognition-dataset>, last: 30.12.2018

Table of Figures

§ Fig.1: Photographs of Charles Babbage’s Brain. In Sir Victor Horsley: “Description of the Brain of Mr. Charles Babbage, F.R.S.”, 1908. On archive.org <https://ia600504.us.archive.org/23/items/philtrans01141826/01141826.pdf>, last: 11.11.2018

§ Fig.2: Temporal summation scheme. Neveu, Curtis / Wikimedia Commons, 2011 <https://commons.wikimedia.org/wiki/File:Temporal_summation.JPG>, last: 30.12.2018

§ Fig.3: Photograph of a High Input Count Analog Neural Network Chip. In: Thomas Pfeil, Tobias C. Potjans [et al.]: Is a 4-bit Synaptic Weight Resolution Enough? Constraints on Enabling Spike-Timing Dependent Plasticity in Neuromorphic Hardware. In: Frontiers in Neuroscience, Vol.6, 2012

§ Fig.4: 3D-rendering of the BrainScaleS wafer-scale hardware system. HBP Neuromorphic Computing Platform Guidebook: About the BrainScaleS Hardware. The Neuromorphic Wafer Module. <https://electronicvisions.github.io/hbp-sp9-guidebook/pm/pm_hardware_configuration.html>, last: 30.12.2018

§ Fig.5: List of i/o parameters of the BrainScaleS model. In: Grübel, Andreas: F09/F10 Neuromorphic Computing. Ver. 0.6. Heidelberg: Kirchhoff Institut für Physik, 2017 <https://www.physi.uni-heidelberg.de/Einrichtungen/FP/anleitungen/old/F09-10.pdf>, last: 30.12.2018

§ Fig.6: Lecture Slide: Comparison of Energy Efficiency between different Computing Techniques and the Human Brain. In: Karlheinz Meier: Simulieren ohne Computer. Physikalische Modelle des Gehirns. Lecture, 2012 <http://flagship.kip.uni-heidelberg.de/video/20130705_SimulierenOhneComputer/#>, last: 30.12.2018

§ Fig.7: Lecture Slide: Comparison of Time Scales for Biological Processes of intelligent behaviour with simulations on Turing Machines and the physical BrainScaleS model. [ibid.]

[1] Plato: “Theaetetus” in Benjamin Jowett [ed., transl.]: The Dialogues of Plato. Translated into English. With Analyses and Introductions. Vol 4 [5 vols.], 3rd rev. corr. ed. Oxford: Oxford University Press, 1892. Online version on Online Library of Liberty. <http://oll.libertyfund.org/titles/plato-dialogues-vol-4-parmenides-theaetetus-sophist-statesman-philebus#Plato_0131-04_2182>, last: 31.12.2018.

[2] “God’s Excellency arises also from another Cause, viz. Wisdom: whereby his Machine lasts longer, and moves more regularly, than those of any other Artist whatsoever.” See Gottfried Wilhelm Leibniz: “Mr. Leibnitz’s Second Paper. Being an Answer to Dr. Clarke’s First Reply.” In: Samuel Clarke: A Collection of Papers, Which passed between the late Learned Mr. Leibnitz, and Dr. Clarke, In the Years 1715 and 1716. London: The Crown at St. Paul’s Church Yard, 1717, p.27.

[3] E.g. “The ego is not sharply separated from the id; towards the bottom it flows together with it. But the repressed also flows together with the id and is merely a part of it. The repressed is sharply separated only from the ego, by the resistances of repression; through the id it can communicate with the ego.” See Sigmund Freud: Das Ich und das Es. Frankfurt a. M.: S. Fischer, 1975 [1923], online on Projekt Gutenberg–DE <http://gutenberg.spiegel.de/buch/das-ich-und-das-es-932/2>, last: 30.12.2018; see also Konrad Lorenz’s psychohydraulic instinct model introduced in Konrad Lorenz: The Comparative Method in Studying Innate Behavior Patterns. Symposia of the Society for Experimental Biology 4, Cambridge: 1950, pp.255ff., digitized by Konrad Lorenz Haus Altenberg <http://klha.at/papers/1950-InnateBehavior.pdf>, last: 30.12.2018; and David E. Rumelhart: The Architecture of Mind. A Connectionist Approach. In: Michael Posner [ed.]: Foundations of Cognitive Science. Cambridge: MIT Press, 1989, pp. 133-159.

[4] For further political implications, see this study analysing broadband access in New York: Office of the New York City Comptroller Scott M. Stringer: Internet Inequality. Broadband Access in NYC. 2015 <https://comptroller.nyc.gov/wp-content/uploads/documents/Internet_Inequality_UPDATE_September_2015.pdf>, last: 20.12.2018.

[5] Julian Oliver, co-author of The Critical Engineering Manifesto, describes the use of the term as one example of “children’s book metaphors” introduced to obscure the political substance of technology. See Sam Hart: Critical Engineering. An Interview with Julian Oliver. On Avant.org, 2014 <http://avant.org/artifact/julian-oliver/>, last: 20.12.2018.

[6] The phrasing follows Dirk Baecker’s classification of epochs defined by the significant societal impact of the introduction of new communicative media (1.0 language, 2.0 writing, 3.0 print, 4.0 electronics), as expounded i.a. in: 4.0 oder Die Lücke die der Rechner lässt. Berlin: Merve, 2018.

[7] The blurriness in all of the mentioned pairs, i.e. the asymmetry in the suggested symmetry of the images, is evident in hindsight and crucial at large: the ancient wax tablet is itself an externalisation of a memory task, thus rearranging the importance and role of short-term memorization, be it in operations of accounting, teaching or learning. Leibniz’s analogy is highly religiously motivated and works best in a non-procedural universe of cycles. Freud’s perspective on the libido introduces a new human quality, closely tied to emotional and social behaviour, while remaining materially close to the ideas of medieval humorism. Associative argumentation drawing on technological innovation is bound to attract oddness, especially over time. Its application in the hard sciences (engineering, medicine) is often criticized (Leibniz by Clarke, Freud by his contemporaries) or exposed by critical thought and art, probably because it is regarded as more consequential there. Interestingly, however, blurring this sharpness in the hope of further innovation appears to be an almost decisive pathway in any form of collaboration between the three.

[8] The same applies to the German short form ‘Rechner’.

[9] See Tim Dutton: Overview of National AI Strategies. On Medium, 2018 <https://medium.com/politics-ai/an-overview-of-national-ai-strategies-2a70ec6edfd>, last: 20.12.2018.

[10] For an overview of the different tribes in artificial intelligence, see Pedro Domingos: The Master Algorithm. How the Quest for the Ultimate Learning Machine Will Remake Our World. New York: Basic Books, 2015; and Carlos E. Perez: The Many Tribes of Artificial Intelligence. On Medium, 2017 <https://medium.com/intuitionmachine/the-many-tribes-problem-of-artificial-intelligence-ai-1300faba5b60>, last: 04.11.2018.

[11] Frank Rosenblatt: Principles of Neurodynamics. Perceptrons and the Theory of Brain Mechanisms. Ithaca: Cornell University, 1961, p.9.

[12] Ross Ashby: An Introduction to Cybernetics. London: Chapman & Hall, 1957.

[13] Sir Victor Horsley: “Description of the Brain of Mr. Charles Babbage, F.R.S.”, 1908. On archive.org <https://ia600504.us.archive.org/23/items/philtrans01141826/01141826.pdf>, last: 11.11.2018; and Bernhard Dotzler: Operateur des Wissens: Charles Babbage (1791-1871). In [id.] [ed.]: Computerkultur Band VI, Babbages Rechenautomate. Ausgewählte Schriften. Vienna [i.a.]: Springer, 1996.

[14] Jack Copeland: Computable Numbers. A Guide. In [id.] [ed.]: The Essential Turing. Oxford: Oxford University Press, 2004, p.55.

[15] Alan Turing: “Intelligent Machinery”. In ibid. [1948], pp.410-432, p.420.

[16] Marvin Minsky in Chris Maslanka: The Emotion Machine. London: BBC Radio 3, 2004 <http://web.media.mit.edu/~minsky/BBC3.mp3>, last: 12.12.2018.

[17] Marvin Minsky: Theory of Neural-Analog Reinforcement Systems and Its Application to the Brain Model Problem. Princeton: Princeton University, 1954.

[18] Matteo Pasquinelli: Machines that Morph Logic. Neural Networks and the Distorted Automation of Intelligence as Statistical Inference. In Glass Bead: “Site 1: Logic Gate. The Politics of the Artifactual Mind.”, 2017 <http://www.glass-bead.org/article/machines-that-morph-logic/?lang=enview>, last: 21.12.2018.

[19] Frank Rosenblatt: The Perceptron. A Perceiving and Recognizing Automaton. Buffalo: Cornell Aeronautical Laboratory, 1957.

[20] Warren McCulloch and Walter Pitts: “How We Know Universals: The Perception of Auditory and Visual Forms.” In: Bulletin of Mathematical Biophysics 9/3, 1947, pp.127-147.

[21] Marvin Minsky, Seymour Papert: Perceptrons. Cambridge: MIT Press, 1969. For a detailed critique of the dispute and its implications, see Pasquinelli, 2017.

[22] Nils J. Nilsson: The Quest for Artificial Intelligence. A History of Ideas and Achievements. Stanford: University Press, 2009, p.408.

[23] John Haugeland: Artificial Intelligence. The Very Idea. Cambridge: MIT Press, 1985, p.112.

[24] Turing was indeed one of the earliest researchers in the field of AI. His term ‘machine intelligence’ has been eclipsed by ‘artificial intelligence’, coined by John McCarthy, Marvin Minsky et al. for the Dartmouth College conference of 1956, despite its more apt referentiality.

[25] Alan Turing: “Lecture on the Automatic Computing Engine”. In: Copeland, 2004 [1947], pp.378-394, p.393.

[26] Alan Turing: “Proposed Electronic Calculator”, 1945. On The Turing Archive for the History of Computing <http://www.alanturing.net/proposed_electronic_calculator/>, last: 31.12.2018, p.16.

[27] Alan Turing: “Letter to W. Ross Ashby”, [undated]. On The Turing Archive for the History of Computing <http://www.alanturing.net/turing_ashby/>, last: 21.12.2018.

[28] ibid.

[29] Stan Frankel points out that, in von Neumann’s own understanding, the fundamental conception of the computer architecture is owed to Turing. See Brian Randell: On Alan Turing and the Origins of Digital Computers. Newcastle upon Tyne: Computing Laboratory University of Newcastle upon Tyne, 1972.

[30] Alan Turing: “Lecture on the Automatic Computing Engine”. In: Copeland, 2004, p.375.

[31] Rosenblatt: 1961, p.vii.

[32] European Commission: “Human Brain Project Flagship” <https://ec.europa.eu/digital-single-market/en/human-brain-project>, last: 22.12.2018.

[33] The full list reads: SP1 – Mouse Brain Organisation, SP2 – Human Brain Organisation, SP3 – Systems and Cognitive Neuroscience, SP4 – Theoretical Neuroscience, SP5 – Neuroinformatics Platform, SP6 – Brain Simulation Platform, SP7 – High-Performance Analytics and Computing Platform, SP8 – Medical Informatics Platform, SP9 – Neuromorphic Computing Platform, SP10 – Neurorobotics Platform, SP11 – Management & Coordination, SP12 – Ethics and Society. Short introductions can be found here: Human Brain Project <https://www.humanbrainproject.eu/en/about/project-structure/subprojects/#SP9>, last: 22.12.2018.

[34] Henry Markram, P. Johannes Helm [et al.]: Dendritic Calcium Transients Evoked by Single Back-propagating Action Potentials in Rat Neocortical Pyramidal Neurons. In: Journal of Physiology, Vol. 485, London: 1995, pp.1-20.

[35] See above: Alan Turing: “Lecture on the Automatic Computing Engine”. In: Copeland, 2004, p.375.

[36] This turns Turing’s argument, seemingly addressed against an overvaluation of the role of electricity, upside down: “From the point of view of the mathematician the property of being digital should be of greater interest than that of being electronic. That it is electronic is certainly important because these machines owe their high speed to this, and without the speed it is doubtful if financial support for their construction would be forthcoming. But this is virtually all that there is to be said on that subject.” Alan Turing: “Lecture on the Automatic Computing Engine”. In: Copeland, 2004, p.378.

[37] Karlheinz Meier: Neuromorphic Computing. Architectures and Applications. Lecture at Kirchhoff Institut für Physik Heidelberg, organized and documented by the author within the framework of the seminar The Aesthetics of Neural Networks at HfG KIM by Prof. Matteo Pasquinelli in February 2018 <https://vimeo.com/259920222> [0:15:35].

[38] Curtis Neveu / Wikimedia Commons: Temporal summation. 2011 <https://commons.wikimedia.org/wiki/File:Temporal_summation.JPG>, last: 30.12.2018. See also Meier, 2018, from [0:21:30].

[39] Meier, 2018: [0:26:30].

[40] See Markus Diesmann: Brain-scale Neuronal Network Simulations on K. In: International Symposium for Next-Generation Integrated Simulation of Living Matter: Proceedings of the 4th Biosupercomputing Symposium. Tokyo: Ministry of Education, Culture, Sports, Science and Technology (MEXT), 2012, pp.83-90; and Meier, 2018: [0:30:40].

[41] Cf. Dorothy G. Flood, Paul D. Coleman: Neuron Numbers and Sizes in Aging Brain: Comparisons of Human, Monkey, and Rodent Data. In: Neurobiology of Aging, Vol. 9, 1988, pp.453-463; and John E. Dowling: Neurons and Networks. An Introduction to Neuroscience. Cambridge: Harvard University Press, 1992, p.32.

[42] The term has also been adopted in web and app design.

[43] See the definitions on TechTarget <https://searchnetworking.techtarget.com/definition/graceful-degradation>, last: 30.12.2018; and on Spektrum <https://www.spektrum.de/lexikon/neurowissenschaft/graceful-degradation/4925>, last: 30.12.2018.

[44] Thomas Pfeil, Tobias C. Potjans [et al.]: Is a 4-bit Synaptic Weight Resolution Enough? Constraints on Enabling Spike-Timing Dependent Plasticity in Neuromorphic Hardware. In: Frontiers in Neuroscience, Vol.6, 2012. Full text online: <https://www.frontiersin.org/articles/10.3389/fnins.2012.00090/full>, last: 30.12.2018.

[45] HBP Neuromorphic Computing Platform Guidebook: About the BrainScaleS Hardware. The Neuromorphic Wafer Module. <https://electronicvisions.github.io/hbp-sp9-guidebook/pm/pm_hardware_configuration.html>, last: 30.12.2018.

[46] Andreas Grübel: F09/F10 Neuromorphic Computing. Ver. 0.6. Heidelberg: Kirchhoff Institut für Physik, 2017 <https://www.physi.uni-heidelberg.de/Einrichtungen/FP/anleitungen/old/F09-10.pdf>, last: 30.12.2018.

[47] In fact, it will probably be more feasible to build a more conclusive representation of insects, molluscs, rodents and birds(!). Cf. List of Animals by Number of Neurons. On Wikipedia.org <https://en.wikipedia.org/wiki/List_of_animals_by_number_of_neurons#cite_note-55>, last: 30.12.2018.

[48] Meier, 2018: [0:14:44].

[49] Karlheinz Meier: Simulieren ohne Computer. Physikalische Modelle des Gehirns. Lecture, 2012 <http://flagship.kip.uni-heidelberg.de/video/20130705_SimulierenOhneComputer/#>, last: 30.12.2018.

[50] Meier, 2018: [1:11:15].

[51] Meier, 2012.

[52] Meier, 2018: [0:55:55].

[53] Korbinian Schreiber: The Play Pen. Accelerated Embodiment for Accelerated Hardware. Lecture at the Human Brain Project Education Programme: 1st HBP Student Conference. 2017 <https://www.youtube.com/watch?v=FC3nCpcpWbs>, last: 30.12.2018.

[54] Karlheinz Meier passed away in October 2018.

[55] Alan Turing in Copeland, 2004, p.420.

[56] The dataset is currently not available due to a lapse in US federal government funding: <https://www.nist.gov/>, last: 30.12.2018.

[57] University of Washington: MegaFace and MF2: Million-Scale Face Recognition. <http://megaface.cs.washington.edu/>, last: 30.12.2018.

[58] The Face Aging Group: HRT Transgender Dataset (no longer available). University of North Carolina Wilmington <https://www.faceaginggroup.com/hrt-transgender-dataset/>, last: 30.12.2018.

[59] See also this discussion, with quotes by the artist Adam Harvey, of the possibilities of generating datasets from data available online and of their socio-political implications: James Vincent: Transgender YouTubers had their videos grabbed to train facial recognition software. The Verge, 2017 <https://www.theverge.com/2017/8/22/16180080/transgender-youtubers-ai-facial-recognition-dataset>, last: 30.12.2018.

[60] Alan Turing: “Intelligent Machinery”. In Copeland: 2004, p.420.

[61] Rolf Pfeifer, Josh C. Bongard: How the Body Shapes the Way We Think. Cambridge: MIT Press, 2006, p.25.

[62] Ibid., e.g. pp.61-89. In reference to the analogies mentioned in the introductory part of this text, a fitting quote from the foreword to the book by Rodney Brooks (director of the MIT Artificial Intelligence Laboratory 1997-2007) mentions the many assumptions drawn about the brain itself through the imagery of technical innovations: “Ever since the human brain has come to be considered as the seat of our thought, desires, and dreams, it has been compared to the most advanced technology possessed by mankind. In my own lifetime I have seen popular ‘complexity’ metaphors for the brain evolve. When I was a young child the brain was likened to an electromagnetic telephone switching network. Then it became an electronic digital computer. Then a massively parallel digital computer. And delightfully, in April 2002, someone in a lecture audience asked me whether the brain could be ‘just like the world wide web.’”

[63] Cf. Donna Haraway: The Companion Species Manifesto. Dogs, People, and Significant Otherness. Chicago: Prickly Paradigm Press, 2003; or Lynn Margulis: The Symbiotic Planet. A New Look at Evolution. London: Phoenix, 1999.

[64] See Peter Sloterdijk: Du mußt dein Leben ändern. Frankfurt a. M.: Suhrkamp, 2009.

[65] Demis Hassabis: What we learned in Seoul with AlphaGo. On Google Blog, 2016 <https://blog.google/technology/ai/what-we-learned-in-seoul-with-alphago/>, last: 30.12.2018.

[66] Jee Heun Kahng, Se Young Lee: Google artificial intelligence program beats S. Korean Go pro with 4-1 score. On Reuters, 2016 <https://www.reuters.com/article/us-cyber-latimes/cyber-attack-hits-u-s-newspaper-distribution-idUSKCN1OT01O>, last: 30.12.2018.

[67] Jean-François Lyotard: “Can Thought go on without a Body?” In [id.]: The Inhuman. Reflections on Time. Transl. by G. Bennington, R. Bowlby. Stanford: Stanford University Press, 1991.