An essay by Adam Harvey for the journal Umbau.

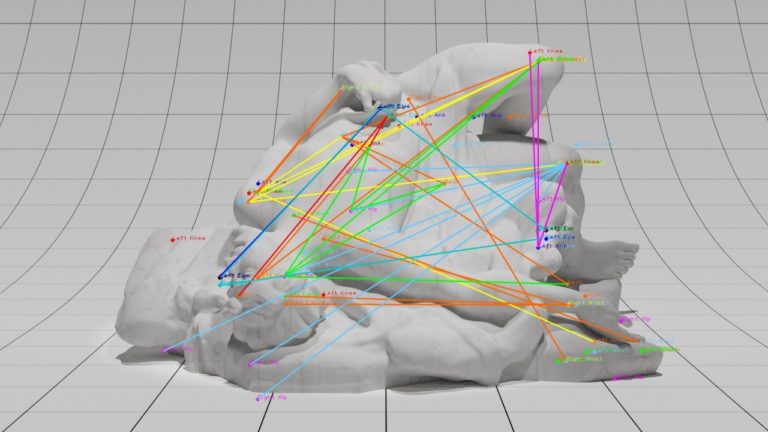

“Becoming training data is political, especially when that data is biometric. But resistance to militarised face recognition and citywide mass surveillance can only happen at a collective level. At a personal level, the dynamics and attacks that were once possible to defeat the Viola-Jones Haar Cascade algorithm are no longer relevant. Neural networks are anti-fragile. Attacking makes them stronger. So-called adversarial attacks are rarely adversarial in nature. Most often they are used to fortify a neural network. In the new optical regime of computer vision every image is a weight, every face is a bias, and every body is a commodity in a global information supply chain.”

Read more → umbau.hfg-karlsruhe.de